Automated Video Data Processing

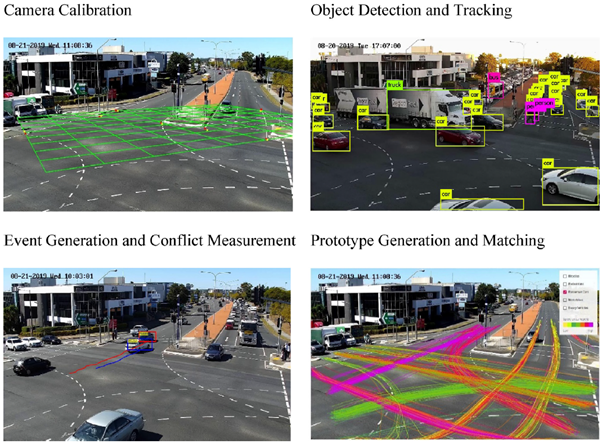

Traffic conflicts identified through automated video processing are often linked to crashes using methods that establish a correlation between non-crash-based and crash-based approaches. Raw video footage obtained from intersections is processed using an Artificial Intelligence (AI)-based video analysis platform developed at the Queensland University of Technology. The automated video data processing method involves six main procedures: camera calibration, object detection and tracking, prototype generation, prototype matching, event generation, and conflict identification.

The first step is camera calibration, which yields the camera parameter estimates needed for positional analysis of road users. This step is required to translate between the three-dimensional real world and the two-dimensional image space of the camera. The next steps involve detecting road users and tracking them across frames. The approach uses the YOLOv3 algorithm to detect objects in the traffic scene and then the Deep-SORT algorithm to track all road users (motorized as well as non-motorized), which makes the detection and tracking module more efficient. The extracted vehicle trajectories are then analyzed to estimate conflict indicators for the conflict events of interest.
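To make the role of the calibration step concrete, the sketch below projects a three-dimensional world point into two-dimensional pixel coordinates using a standard pinhole camera model. It is an illustration only, not the platform's actual calibration code: the function name `project_point` and all parameter values (the intrinsic matrix `K`, rotation `R`, and translation `t`) are hypothetical, and lens distortion is ignored.

```python
def project_point(world_xyz, K, R, t):
    """Project a 3D world point (metres) to 2D pixel coordinates (pinhole model)."""
    # World -> camera coordinates: X_cam = R * X_world + t
    cam = [sum(R[i][j] * world_xyz[j] for j in range(3)) + t[i] for i in range(3)]
    if cam[2] <= 0:
        raise ValueError("point is behind the camera")
    # Perspective division gives normalized image coordinates
    x = cam[0] / cam[2]
    y = cam[1] / cam[2]
    # Intrinsics map normalized coordinates to pixels
    u = K[0][0] * x + K[0][1] * y + K[0][2]
    v = K[1][1] * y + K[1][2]
    return u, v


# Hypothetical calibration result: 1000 px focal length, principal point
# at the centre of a 1280x720 image, camera axes aligned with world axes.
K = [[1000.0, 0.0, 640.0],
     [0.0, 1000.0, 360.0],
     [0.0, 0.0, 1.0]]
R = [[1.0, 0.0, 0.0],
     [0.0, 1.0, 0.0],
     [0.0, 0.0, 1.0]]
t = [0.0, 0.0, 0.0]

# A road user 20 m in front of the camera, 2 m to the right and 1 m below the axis
u, v = project_point([2.0, 1.0, 20.0], K, R, t)
print(u, v)  # approximately (740, 410)
```

In practice the calibrated parameters are used in the opposite direction as well: once the mapping is known, pixel positions of tracked road users on the road plane can be converted back to real-world positions, which is what makes speed and distance-based conflict indicators measurable.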